Before I talk about policy and governance for Kubernetes, let’s briefly talk about policy and governance in general. In short, it means providing a set of rules that define guidelines which can be either enforced or audited. So why do we need this? In a cloud ecosystem, decisions are made in a decentralized way and at a rapid pace, so a governance model or policy becomes crucial to keep the entire organization on track. Such definitions can include, but are not limited to, security baselines or the consistency of resources and deployments.

So, why do we need governance and policy for Kubernetes? Kubernetes provides role-based access control (RBAC), which allows operators to define in a very granular manner which identity is allowed to create or manage which resource. But RBAC does not allow us to control the specification of those resources. As already mentioned, this is a necessary requirement for defining policy boundaries. Some examples are:

- whitelist of trusted container registries and images

- required container security specifications

- required labels to group resources

- prevent conflicting Ingress host resources

- prevent publicly exposed LoadBalancer services

- …

This is where policy and governance for Kubernetes comes in. But let me first introduce you to Open Policy Agent, which is the foundation for policy management on Kubernetes and even across the wider cloud-native ecosystem.

Open Policy Agent

Open Policy Agent (OPA) is an open-source project created by Styra. It provides policy-based control for cloud-native environments using a unified toolset and framework and a declarative approach. OPA allows you to decouple policy declaration and management from the application code, either by integrating the OPA Go library or by calling the REST API of a co-located OPA daemon instance.

OPA can evaluate any JSON-based input against user-defined policies and mark the input as passing or failing. Because this model is so generic, Open Policy Agent can be integrated with a variety of tools and projects. Some examples are:

- API and service authorization with Envoy, Kong or Traefik

- Authorization policies for SQL, Kafka and others

- Container Network authorization with Istio

- Test policies for Terraform infrastructure changes

- Policies for SSH and sudo

- Policy and Governance for Kubernetes

Open Policy Agent policies are written in a declarative policy language called Rego. Rego queries are claims about data stored in OPA. These queries can be used to define policies that enumerate data instances that violate the expected state of the system.

You can use the Rego playground to get familiar with the policy language. The playground also contains a variety of examples that help you to get started writing your own queries.

OPA Gatekeeper — the Kubernetes implementation

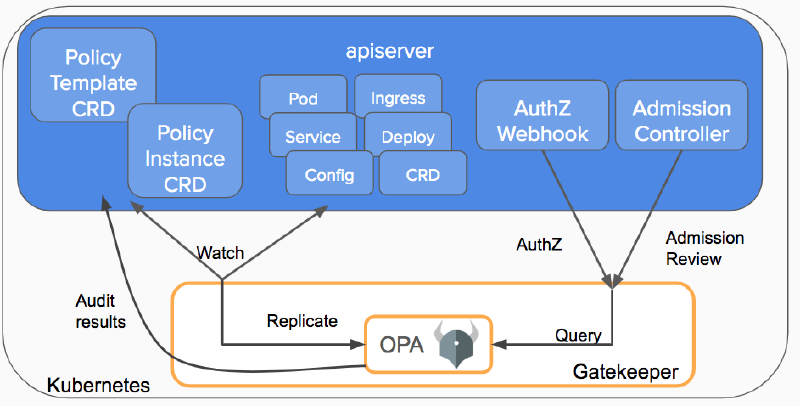

Open Policy Agent Gatekeeper was introduced by Google, Microsoft, Red Hat, and Styra. It is a Kubernetes admission controller built around OPA that integrates it with the Kubernetes API server and enforces policies defined through Custom Resource Definitions (CRDs).

The Gatekeeper webhook gets invoked whenever a Kubernetes resource is created, updated, or deleted, which allows Gatekeeper to permit or deny the request. In addition, Gatekeeper can audit existing resources. Policies, as well as data, can be replicated into the included OPA instance to enable advanced queries that, for example, need access to objects in the cluster other than the object currently under evaluation.

Get started with Gatekeeper

This post will not cover how to install Gatekeeper. All steps, as well as detailed information, are available on GitHub.

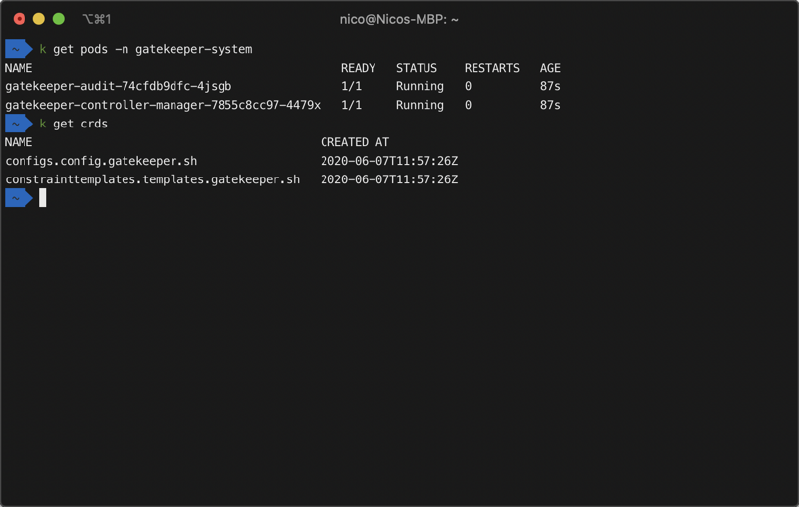

After installing Gatekeeper you are ready to define your first policies. But let’s first review what we got:

Gatekeeper consists of two deployments:

- gatekeeper-controller-manager is the admission controller that is called by the Kubernetes API server on resource changes, verifies them against the policy definitions, and permits or denies the action.

- gatekeeper-audit verifies and audits existing resources.

Besides these two deployments, two Custom Resource Definitions (CRDs) were created:

- ConstraintTemplate is used to define a policy based on metadata and the actual Rego query.

- Config is used to define which resources should be replicated to the OPA instance to allow advanced policy queries.
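
To make the Config resource a bit more tangible, here is a minimal sketch that asks Gatekeeper to replicate Namespace objects into OPA. It follows the shape I know from the Gatekeeper documentation; the API version and the gatekeeper-system namespace are assumptions that depend on your Gatekeeper release and installation.

```yaml
# Sketch only: apiVersion and namespace are assumptions, check your installation.
apiVersion: config.gatekeeper.sh/v1alpha1
kind: Config
metadata:
  name: config                  # Gatekeeper expects the resource to be named "config"
  namespace: gatekeeper-system  # assumed installation namespace
spec:
  sync:
    syncOnly:
      # Replicate all Namespace objects so that policies can look them up
      - group: ""
        version: "v1"
        kind: "Namespace"
```

Replicated objects can then be referenced from Rego under data.inventory, which is what enables policies that compare the object under review with other objects in the cluster.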

A first example

In this example, we want to make sure that all labels required by the policy are present in the Kubernetes resource manifest. To do this, we first have to build our Rego query:

    package k8srequiredlabels

    violation[{"msg": msg, "details": {"missing_labels": missing}}] {
      provided := {label | input.review.object.metadata.labels[label]}
      required := {label | label := input.parameters.labels[_]}
      missing := required - provided
      count(missing) > 0
      msg := sprintf("you must provide labels: %v", [missing])
    }

It consists of a package containing a violation rule. The rule defines the input data, the condition to be matched, and a message that is returned in case of a violation.

We now need to make Gatekeeper aware of our definition. This is done by wrapping our Rego query in a ConstraintTemplate resource:

    apiVersion: templates.gatekeeper.sh/v1beta1
    kind: ConstraintTemplate
    metadata:
      name: k8srequiredlabels
    spec:
      crd:
        spec:
          names:
            kind: K8sRequiredLabels
            listKind: K8sRequiredLabelsList
            plural: k8srequiredlabels
            singular: k8srequiredlabels
          validation:
            # Schema for the `parameters` field
            openAPIV3Schema:
              properties:
                labels:
                  type: array
                  items:
                    type: string
      targets:
        - target: admission.k8s.gatekeeper.sh
          rego: |
            package k8srequiredlabels

            violation[{"msg": msg, "details": {"missing_labels": missing}}] {
              provided := {label | input.review.object.metadata.labels[label]}
              required := {label | label := input.parameters.labels[_]}
              missing := required - provided
              count(missing) > 0
              msg := sprintf("you must provide labels: %v", [missing])
            }

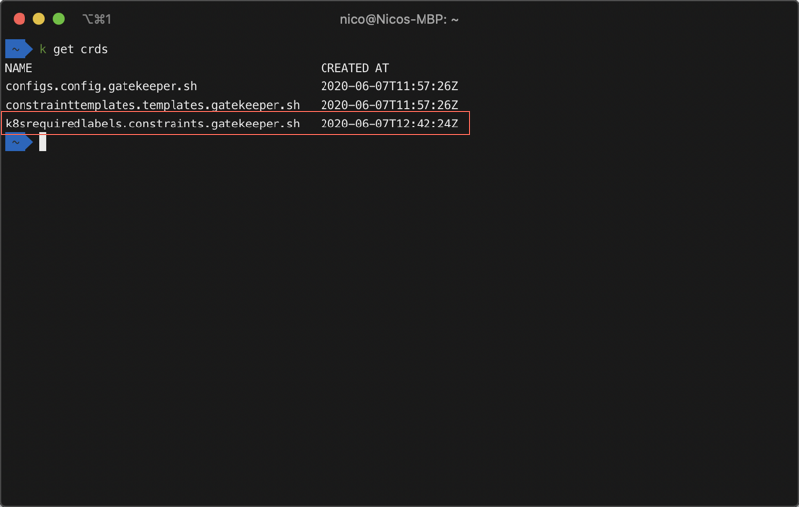

The ConstraintTemplate resource consists of the Rego query as well as some metadata. As soon as we apply the ConstraintTemplate resource, a new CRD is created. In our case, it is called K8sRequiredLabels. This allows us to easily interact with our policy later on.

We have now defined our policy, but you may have noticed that we have not yet defined which labels we require our Kubernetes resources to have. We also have not yet defined which Kubernetes resources the policy should apply to. To do so, we must create another manifest, called a Constraint:

    apiVersion: constraints.gatekeeper.sh/v1beta1
    kind: K8sRequiredLabels
    metadata:
      name: ns-must-have-gk
    spec:
      match:
        kinds:
          - apiGroups: [""]
            kinds: ["Namespace"]
      parameters:
        labels: ["gatekeeper"]

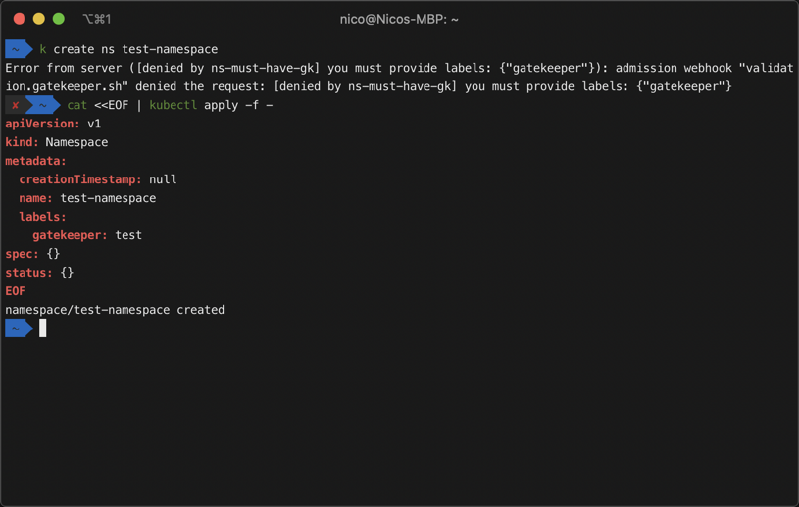

The Constraint manifest allows us to enforce the policy defined above by providing our parameters as well as the Kubernetes resources it should act on. In our example, we want to enforce this policy for all Namespace resources and make sure that they all carry a gatekeeper label.

If we now apply the above manifest, we can no longer create a namespace without providing a gatekeeper label. Instead, our configured message is returned. If we provide the label, the namespace gets created.
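
For illustration, a namespace manifest that should pass the constraint could look like the sketch below. The namespace name and the label value are made up for this example; the Rego rule above only collects label keys, so any value should do.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: demo-team        # hypothetical name, chosen for this example
  labels:
    gatekeeper: "true"   # only the key is checked by the policy, the value is arbitrary
```

Removing the labels block from this manifest should reproduce the rejection described above.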

The Gatekeeper repository provides some more examples which can be used to get started.
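
To give an impression of what such an example can look like, here is a sketch for the trusted-registry use case from the list at the beginning of this post. It is loosely modeled on the allowed-repos policy idea and is not copied from the repository: the template name, the helper rule image_allowed, the constraint name, and the registry prefix are all assumptions of mine.

```yaml
apiVersion: templates.gatekeeper.sh/v1beta1
kind: ConstraintTemplate
metadata:
  name: k8sallowedrepos
spec:
  crd:
    spec:
      names:
        kind: K8sAllowedRepos
      validation:
        # Schema for the `parameters` field
        openAPIV3Schema:
          properties:
            repos:
              type: array
              items:
                type: string
  targets:
    - target: admission.k8s.gatekeeper.sh
      rego: |
        package k8sallowedrepos

        # Report every container whose image does not start with an allowed prefix.
        # Note: this sketch only looks at spec.containers, not at init containers.
        violation[{"msg": msg}] {
          container := input.review.object.spec.containers[_]
          not image_allowed(container.image)
          msg := sprintf("container <%v> uses an image from an untrusted registry: %v", [container.name, container.image])
        }

        # True if the image starts with at least one of the configured prefixes
        image_allowed(image) {
          startswith(image, input.parameters.repos[_])
        }
---
# Apply this only after the template above has been processed,
# because the K8sAllowedRepos CRD does not exist before that.
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sAllowedRepos
metadata:
  name: pods-from-trusted-registry   # hypothetical name
spec:
  match:
    kinds:
      - apiGroups: [""]
        kinds: ["Pod"]
  parameters:
    repos: ["registry.example.com/"]  # placeholder registry prefix
```

The trailing slash in the prefix is deliberate: without it, an image from a host such as registry.example.com.evil.io would also match.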

Policy auditing

Let’s talk about auditing. Why do we need it? Well, we may have created resources before we created policies, and we would like to review them to make sure they meet our policies. Or maybe we just want to audit our cluster without enforcing the policies.
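
For the audit-only case, a Constraint can be switched to a non-enforcing mode. The following is a minimal sketch based on the Constraint from the first example, assuming your Gatekeeper release supports the enforcementAction field with the dryrun value (older releases may not).

```yaml
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sRequiredLabels
metadata:
  name: ns-must-have-gk
spec:
  # Assumption: supported by your Gatekeeper version.
  # With dryrun, violations are reported by the audit but requests are not rejected.
  enforcementAction: dryrun
  match:
    kinds:
      - apiGroups: [""]
        kinds: ["Namespace"]
  parameters:
    labels: ["gatekeeper"]
```

This can be a convenient way to roll out a new policy: start in dryrun, review the reported violations, and remove the field once the cluster is clean.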

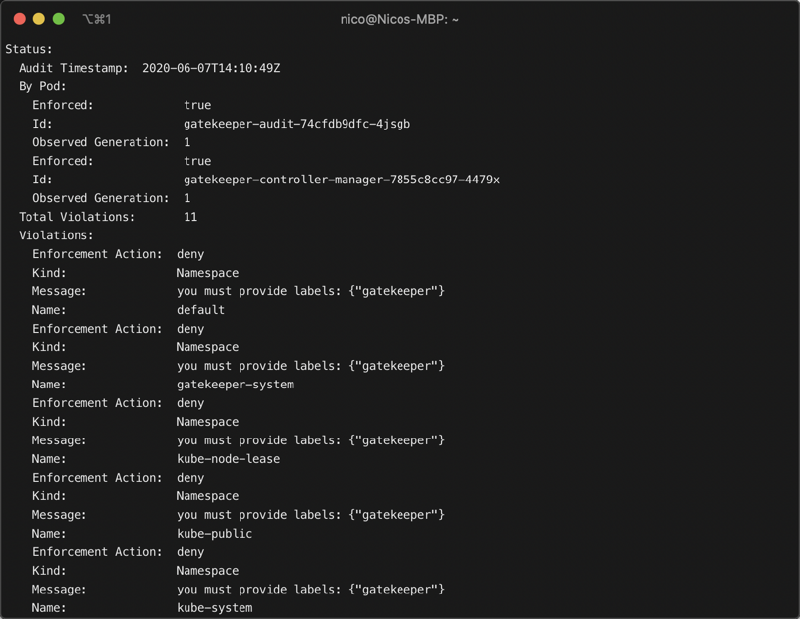

For this we can use our K8sRequiredLabels Custom Resource Definition we created above and retrieve our auditing information from the Kubernetes API server using kubectl or any other tool of our choice. In the below example I used kubectl describe K8sRequiredLabels ns-must-have-gk to get the following output:

Furthermore, Gatekeeper provides Prometheus endpoints that can be used to collect metrics and to build dashboards and alerts. Both gatekeeper-controller-manager and gatekeeper-audit expose these endpoints, which can be used to gather the following metrics:

- gatekeeper_constraints: Current number of constraints

- gatekeeper_constraint_templates: Current number of constraint templates

- gatekeeper_constraint_template_ingestion_count: The number of constraint template ingestion actions

- gatekeeper_constraint_template_ingestion_duration_seconds: Constraint Template ingestion duration distribution

- gatekeeper_request_count: The number of requests that are routed to admission webhook from the API server

- gatekeeper_request_duration_seconds: Admission request duration distribution

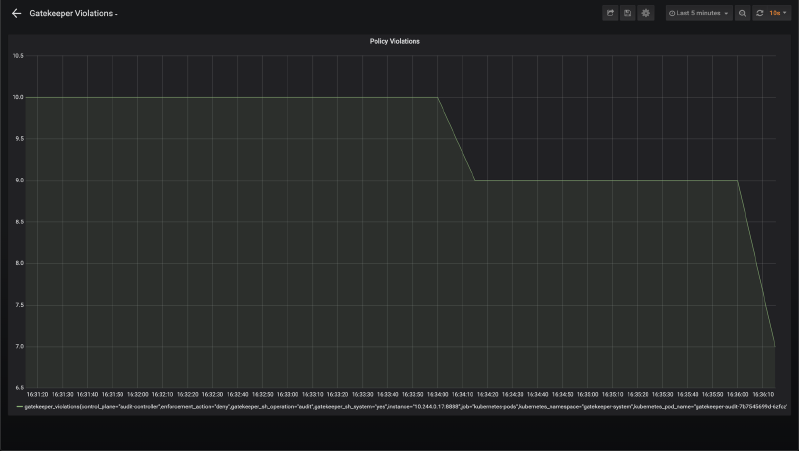

- gatekeeper_violations: The number of audit violations per constraint detected in the last audit cycle

- gatekeeper_audit_last_run_time: The epoch timestamp since the last audit runtime

- gatekeeper_audit_duration_seconds: Audit cycle duration distribution

All you have to do is make your Prometheus instance aware of it. The easiest way is to customize both deployments and add the well-known annotations to the pod specification to enable Prometheus scraping:

annotations:

prometheus.io/port: "8888"

prometheus.io/scrape: "true"

With this in place, you can collect the above Gatekeeper metrics and use them to build auditing dashboards as well as alerting.

Grafana Dashboard based on Gatekeeper metrics
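
As for alerting, a Prometheus rule built on the gatekeeper_violations metric could look like the sketch below. Only the metric name comes from the list above; the group name, alert name, threshold, duration, and severity label are assumptions you would adapt to your own setup.

```yaml
groups:
  - name: gatekeeper              # hypothetical rule group name
    rules:
      - alert: GatekeeperAuditViolations
        # Fires if the audit has been reporting violations for 15 minutes
        expr: sum(gatekeeper_violations) > 0
        for: 15m
        labels:
          severity: warning       # assumed label convention
        annotations:
          summary: "Gatekeeper audit reports policy violations"
```

Summing over all series keeps the rule independent of the exact labels the metric carries in your Gatekeeper version.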