This blog post is about how to deploy a virtual 4G stack using GNS3 and Kubernetes. It covers the following:

- Open5gs vEPC

- OAI UE and eNodeB simulator

- Kubernetes 1.17.3

- Calico CNI

- Vyos Router

- GNS3 (optional, but it makes simulations easier)

The motivation for this post is that I worked as a packet core support engineer three years ago, before moving into cloud native with a focus on Kubernetes, so I decided to see whether I could simulate a 4G stack using open source tools. I hope you find this as interesting as I did!

Note: Some familiarity with Kubernetes and telecommunication networks is assumed.

GNS3 was chosen as the deployment platform because it makes it easy to see everything at a glance while still interacting with the components. Telecom networks are largely the same logically, but no two implementations are generally the same. Take the points below as just one way of implementing this; there are many ways to do it, especially when Kubernetes is used.

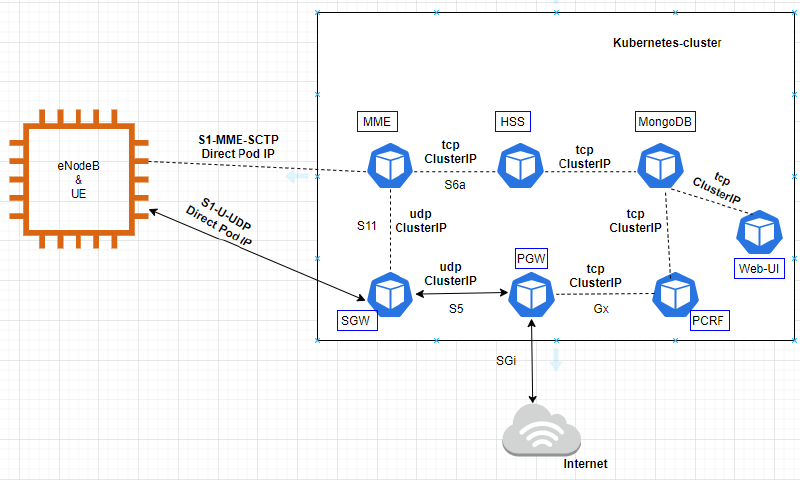

- The S1AP interface of the MME was exposed directly at the pod layer instead of through a Service, so the eNodeB connects straight to the MME pod. Whether this is possible depends on how the EPC software is developed. I chose this route because it avoids the requirement of enabling the SCTP flag in the Kubernetes API server.

- Calico was used as the CNI. It can advertise the pod IPs directly into your L3 network over BGP, which is quite interesting in my opinion. Here Calico advertised the pod IPs to the Vyos router, which made routing between the eNodeB and the EPC network seamless.
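To make the BGP advertisement a little more concrete, here is a minimal sketch that renders a Calico `BGPPeer` manifest for one external router. The peer address `192.0.2.1`, the AS number `64512` and the resource name `vyos-router` are placeholders of mine, not the values used in this lab; the Vyos side needs a matching BGP neighbor configuration, which is not shown.

```shell
#!/bin/sh
# Render a Calico BGPPeer manifest for a single external router.
# Arguments: $1 = router IP, $2 = router AS number (both hypothetical here).
render_bgppeer() {
  peer_ip="$1"
  peer_as="$2"
  cat <<EOF
apiVersion: projectcalico.org/v3
kind: BGPPeer
metadata:
  name: vyos-router
spec:
  peerIP: ${peer_ip}
  asNumber: ${peer_as}
EOF
}

# Print the manifest for a placeholder peer; nothing is applied to the cluster.
render_bgppeer "192.0.2.1" "64512"
```

Once the values match your router, the output can be piped into `calicoctl apply -f -`. Because no node selector is set, this is a global peer, so every Calico node would peer with the router.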

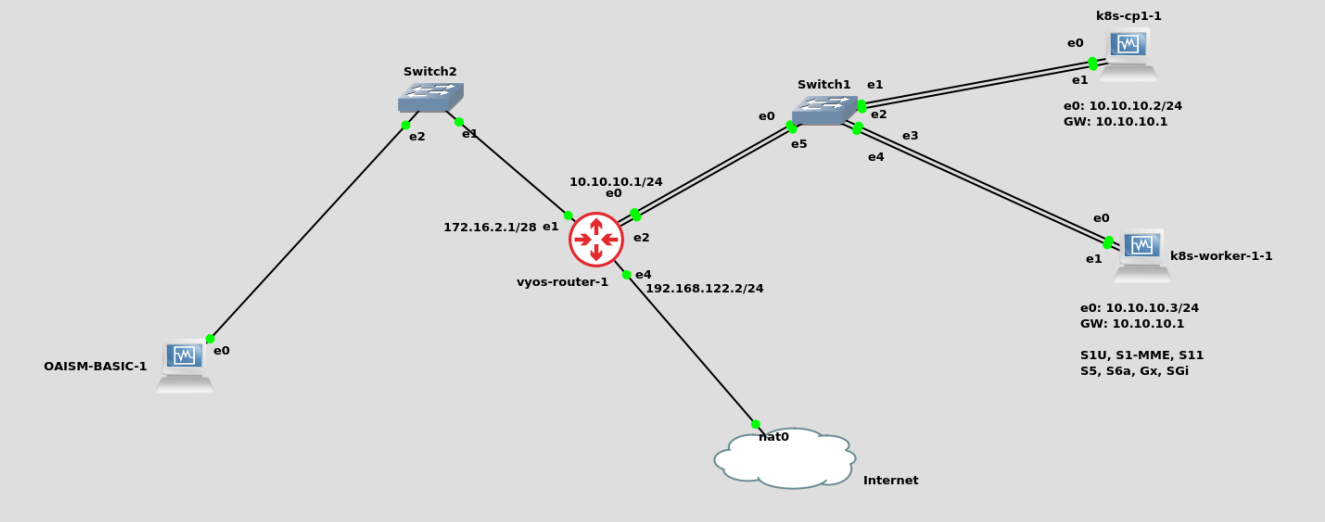

The GNS3 network diagram is shown below:

Logical topology is shown below:

The UE and eNodeB simulators run in the same VM, while the virtual EPC stack runs in the Kubernetes cluster.

Kubernetes Setup

The cluster was installed by following the official kubeadm installation documentation, with Calico as the CNI.

vEPC Setup

The vEPC was installed using the Open5gs software. It provides the following components:

- HSS Database: MongoDB is used for this purpose (deployed as a statefulset).

- Web-UI: Web interface to administer the MongoDB database. This is used to add subscriber information.

- HSS (deployed as a statefulset)

- PGW: It combines both PGW-C and PGW-U (deployed as a statefulset)

- SGW: It combines both SGW-C and SGW-U (deployed as a statefulset)

- MME (deployed as a statefulset)

- PCRF (deployed as a statefulset)

It uses the freeDiameter project for the components that require Diameter (PGW-PCRF, HSS-MME).

A single docker image was used for all the vEPC core components (excluding the MongoDB and Web-UI). Utilizing a dedicated image for each of the core EPC components may be desirable, especially to reduce the overall image size.

The manifest files can be found in the repo:

https://bitbucket.org/infinitydon/virtual-4g-simulator/src/master/open5gs/

- Create the open5gs namespace: kubectl create ns open5gs

- Deploy all the manifest files:

kubectl apply -f hss-database/ (it is advisable to wait for the MongoDB pod to be running before proceeding with the rest)

kubectl apply -f hss/

kubectl apply -f mme/

kubectl apply -f sgw/

kubectl apply -f pgw/

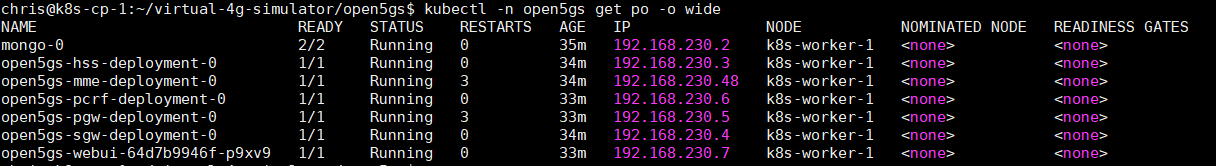

kubectl apply -f pcrf/ - Status after applying the manifests:
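The apply sequence above, including the pause for MongoDB, can be sketched as a small script. This is only one way to do it: it assumes it is run from the directory containing the manifest folders, and it waits for every pod in the `open5gs` namespace to become Ready rather than selecting the MongoDB pod by name or label, since I do not want to guess those. The `run` helper and the dry-run default are my own additions.

```shell
#!/bin/sh
# Dry run by default: commands are printed, not executed.
# Run with DRY_RUN= (empty) to execute them against the cluster.
DRY_RUN="${DRY_RUN-1}"
NS="open5gs"

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi
}

deploy_open5gs() {
  # Database first, as advised above.
  run kubectl apply -f hss-database/ || return 1

  # Pods may not exist for a few seconds after the apply,
  # so retry the wait up to five times before giving up.
  tries=0
  until run kubectl -n "$NS" wait --for=condition=Ready pod --all --timeout=300s; do
    tries=$((tries + 1))
    [ "$tries" -ge 5 ] && return 1
    sleep 5
  done

  # Remaining EPC components, in the same order as the manual steps.
  for dir in hss mme sgw pgw pcrf; do
    run kubectl apply -f "$dir/" || return 1
  done

  run kubectl -n "$NS" get pods -o wide
}

deploy_open5gs
```

In dry-run mode the script only prints the eight kubectl commands it would run, which is a convenient way to review the order before touching the cluster.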

OpenAirInterface Simulator

The basic simulator was used. The full documentation can be found at:

https://gitlab.eurecom.fr/oai/openairinterface5g/blob/master/doc/BASIC_SIM.md

Branch v1.2.1 was used.

The OpenAirInterface SIM details are configured in the following file:

$OPENAIR_HOME/openair3/NAS/TOOLS/ue_eurecom_test_sfr.conf

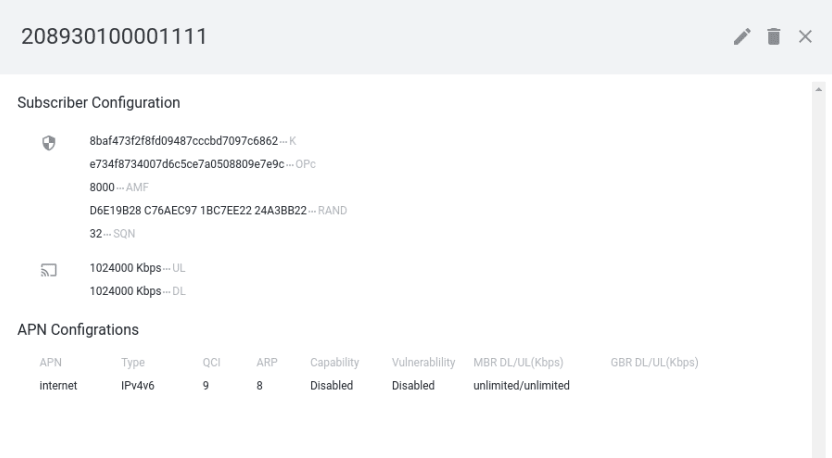

IMSI="208930100001111";

USIM_API_K="8baf473f2f8fd09487cccbd7097c6862";

OPC="e734f8734007d6c5ce7a0508809e7e9c";

Subscriber details need to be added to the HSS database (MongoDB). This can be done via the web-ui pod; kubectl port-forward can be used to access the UI locally.

kubectl -n open5gs port-forward --address=10.10.10.2 svc/open5gs-webui 8888:80

You should adapt the forwarding parameters depending on how you want to do this.
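Since the subscriber entry in the web UI has to carry the same IMSI, K and OPC values as the UE configuration file, a quick shape check before typing them in can catch copy-paste damage such as typographic quotes or a dropped character. The sketch below is my own helper, not part of Open5gs or OAI; it only checks that the IMSI is at most 15 digits and that the two keys are 32 hexadecimal characters each.

```shell
#!/bin/sh
# Shape checks for SIM values; these do not verify that the keys are correct,
# only that they have a plausible form.
is_imsi()   { printf '%s' "$1" | grep -Eq '^[0-9]{1,15}$'; }
is_hex128() { printf '%s' "$1" | grep -Eq '^[0-9a-fA-F]{32}$'; }

# Arguments: $1 = IMSI, $2 = K, $3 = OPC
check_sim() {
  is_imsi "$1"   || { echo "IMSI must be at most 15 digits" >&2; return 1; }
  is_hex128 "$2" || { echo "K must be 32 hex characters" >&2; return 1; }
  is_hex128 "$3" || { echo "OPC must be 32 hex characters" >&2; return 1; }
  echo "SIM values look well-formed"
}

# Values from the UE configuration file shown above.
check_sim "208930100001111" \
          "8baf473f2f8fd09487cccbd7097c6862" \
          "e734f8734007d6c5ce7a0508809e7e9c"
```

With the values from this post the script prints `SIM values look well-formed`; a value that still carries curly quotes would be rejected.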

The eNodeB is initialized using the following:

cd oasim_repo_folder

source oaienv

cd cmake_targets/lte_build_oai/build

ENODEB=1 sudo -E ./lte-softmodem -O $OPENAIR_HOME/ci-scripts/conf_files/lte-fdd-basic-sim.conf --basicsim > enb.log 2>&1

The UE is initialized using the following:

cd oasim_repo_folder

source oaienv

cd cmake_targets/lte_build_oai/build

../../nas_sim_tools/build/conf2uedata -c $OPENAIR_HOME/openair3/NAS/TOOLS/ue_eurecom_test_sfr.conf -o .

sudo -E ./lte-uesoftmodem -C 2625000000 -r 25 --ue-rxgain 140 --basicsim > ue.log 2>&1

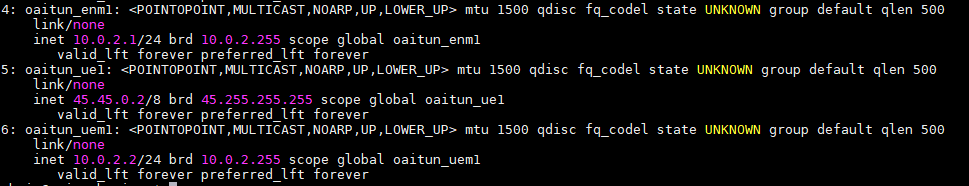

OpenAirInterface Interfaces After eNodeB and UE are running

oaitun_enm1: eNodeB interface (the UE connects to it; both are in the same IP network)

oaitun_uem1: UE interface towards the eNodeB

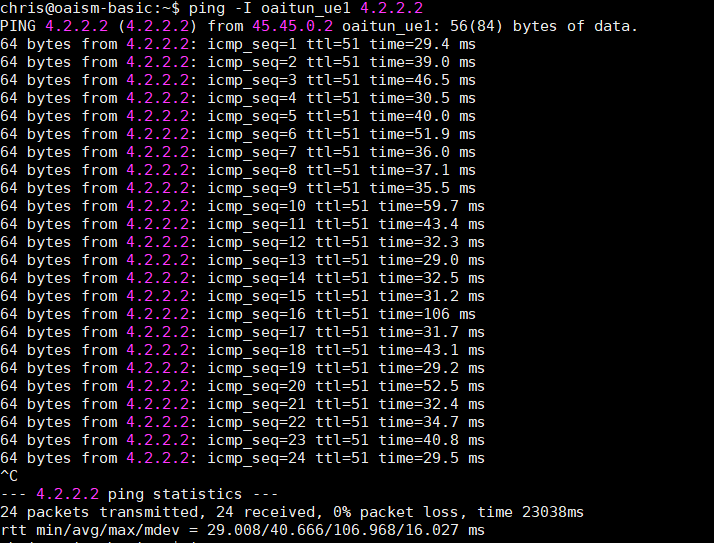

oaitun_ue1: UE interface used to communicate with the PGW. OAI assigns it the UE IP address allocated by the PGW, which is 45.45.0.2 in this case.

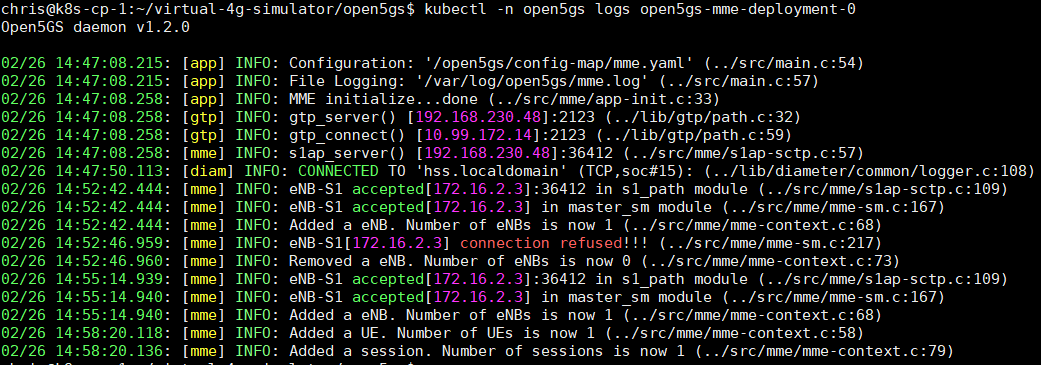

Open5gs MME Status After eNodeB and UE are running:

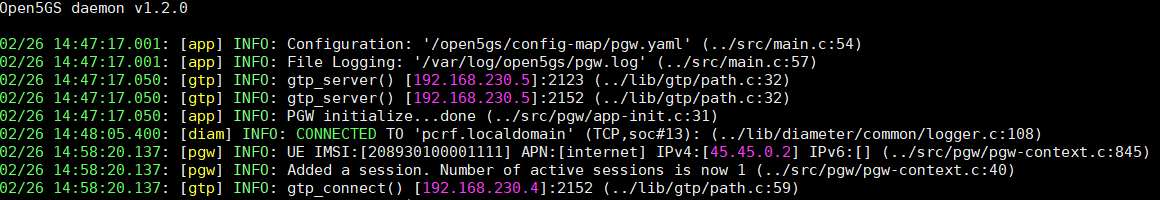

Open5gs PGW Status After eNodeB and UE are running:

UE imsi and IP address can be seen above, this matches what is in the UE configuration/status

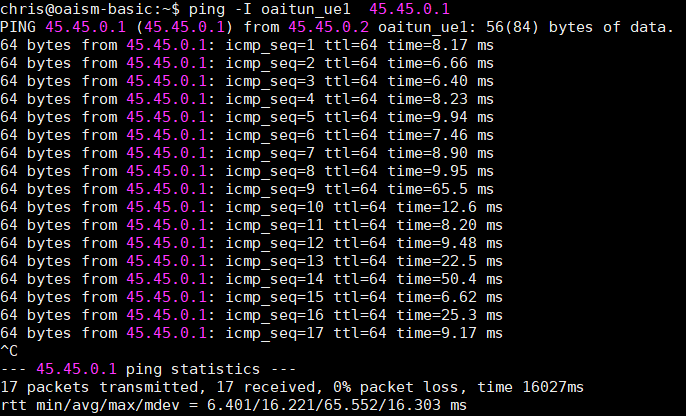

PING Tests From UE to PGW:

PING Tests From UE To The Internet Via PGW:
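For readers who want to repeat the two ping tests, the commands can be sketched as below. The interface name `oaitun_ue1` comes from this post. The PGW address `45.45.0.1` is an assumption on my part (the first address of the pool that handed out 45.45.0.2), and `8.8.8.8` is just a well-known public address used as an example Internet target. The `run` helper and the dry-run default are also my additions.

```shell
#!/bin/sh
# Dry run by default: commands are printed, not executed.
# Run with DRY_RUN= (empty) on the UE VM to execute them.
DRY_RUN="${DRY_RUN-1}"
UE_IF="oaitun_ue1"     # UE tunnel interface named in the text
PGW_ADDR="45.45.0.1"   # ASSUMPTION: PGW-side address of the UE pool
INET_ADDR="8.8.8.8"    # example public address, not taken from the post

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi
}

ue_connectivity_checks() {
  # Confirm the UE tunnel interface exists and has its IPv4 address.
  run ip -4 addr show dev "$UE_IF" || return 1
  # Ping the PGW, then an Internet host, forcing traffic out of the UE tunnel.
  run ping -c 4 -I "$UE_IF" "$PGW_ADDR" || return 1
  run ping -c 4 -I "$UE_IF" "$INET_ADDR"
}

ue_connectivity_checks
```

Binding the ping to the tunnel interface with `-I` matters here, because the VM also has its regular interface and the traffic could otherwise bypass the EPC entirely.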

In conclusion, the following points should also be noted:

- Kubernetes Operators might help with the life-cycle management of EPC components, especially since the Kubernetes design will ultimately be influenced by how EPC vendors engineer their solutions.

- NAT was implemented in the PGW pod; this can be offloaded to a CG-NAT solution. The routing would then have to be redesigned, since the PGW IP pool has to be advertised to the network one way or another. Vyos can also be used for this purpose.

- Calico-VPP integration is currently ongoing. It should improve the data plane, especially where SR-IOV is not feasible (cloud platforms like AWS already offer enhanced network adapters/instances that can further improve performance).
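As an illustration of the NAT point above, one common way to do source NAT for a UE pool on Linux is an iptables MASQUERADE rule. The sketch below is not taken from the manifests in the repo: the pool `45.45.0.0/16` is inferred from the UE address seen earlier, and the tunnel interface name `ogstun` is hypothetical and may differ in your Open5gs version.

```shell
#!/bin/sh
# Dry run by default: the rule is printed, not installed.
# Run with DRY_RUN= (empty) as root inside the PGW pod to install it.
DRY_RUN="${DRY_RUN-1}"

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi
}

# Arguments: $1 = UE IP pool (CIDR), $2 = PGW tunnel interface
pgw_masquerade() {
  pool="$1"
  tun_if="$2"
  # Masquerade UE traffic that leaves through any interface other than the tunnel.
  run iptables -t nat -A POSTROUTING -s "$pool" ! -o "$tun_if" -j MASQUERADE
}

# ASSUMPTION: pool and interface name are placeholders for this lab.
pgw_masquerade "45.45.0.0/16" "ogstun"
```

Offloading to CG-NAT would mean dropping a rule like this from the pod and instead making the UE pool routable, for example by advertising it towards the Vyos router.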

Learn More

https://docs.projectcalico.org/reference/resources/bgppeer#bgp-peer-definition

https://gitlab.eurecom.fr/oai/openairinterface5g/blob/master/doc/BASIC_SIM.md

https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/install-kubeadm/